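What is dynamic storage

OpenShift persistent storage (PVs) comes in two kinds: static and dynamic.

- Static storage: an administrator creates PVs manually, and PVCs then bind to them.

- Dynamic storage: the storage system creates PVs automatically according to each PVC's requirements. This is driven by an object called a StorageClass.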

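What is a StorageClass

- A StorageClass is a resource object in OpenShift. It describes a class of storage and provides a way to pass the parameters used to provision storage dynamically, on demand.

- StorageClass objects can also act as an administrative mechanism for offering different tiers of storage and controlling access to them.

- With StorageClasses, administrators no longer need to create PVs by hand. An OpenShift user only specifies a StorageClass when creating a PVC. The matching dynamic provisioner then creates the required storage according to that StorageClass's configuration.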

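Using NFS before StorageClass

A PV had to be created by hand every time. In a word: tedious.

Enter StorageClass

Configure it once and provisioning is automatic from then on, with no manual PV creation. In a word: convenient.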

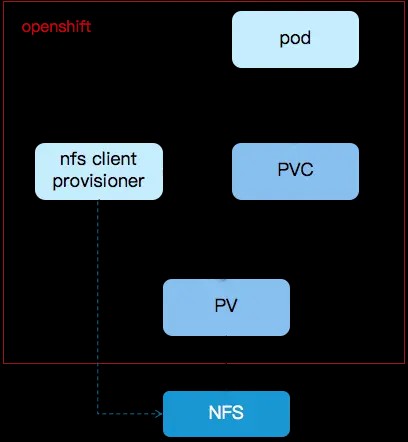

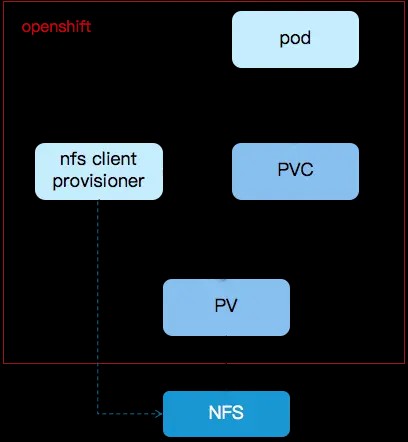

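How the NFS provisioner works

- A new PVC is created. It either relies on the default StorageClass or explicitly names the NFS StorageClass.

- The pod running nfs-client-provisioner, following its configuration, creates a new directory under the shared NFS directory and creates a new PV that points to that directory.

- The new PVC is bound to the PV created in the previous step, which completes the PVC creation.

- Pods that reference the PVC can now mount it.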
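The directory-per-claim step can be sketched as a local simulation. This is not the provisioner's actual code. It assumes the `<namespace>-<pvcName>-<pvName>` naming visible in the `ls /nfsdata/share` listing later in this article, and `provision_dir` and `pv_export_path` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical simulation of the provisioner's "create" path.
# ROOT stands in for the NFS export as mounted inside the provisioner pod
# (/persistentvolumes in the Deployment used in this article).
# Assumed naming scheme: <namespace>-<pvcName>-<pvName>.

# provision_dir ROOT NAMESPACE PVC_NAME PV_NAME
# Creates the per-claim directory and prints its full path.
provision_dir() {
  local root=$1 ns=$2 pvc=$3 pv=$4
  local dir="${root}/${ns}-${pvc}-${pv}"
  mkdir -p -- "$dir" || return 1
  printf '%s\n' "$dir"
}

# pv_export_path NFS_PATH NAMESPACE PVC_NAME PV_NAME
# Prints the server-side path the new PV would point at
# (NFS_PATH is the export configured in the Deployment, e.g. /nfsdata/share).
pv_export_path() {
  printf '%s/%s-%s-%s\n' "$1" "$2" "$3" "$4"
}
```

For example, `pv_export_path /nfsdata/share test hello-pvc pvc-fb952566-4bed-11e9-9007-525400ad3b43` prints `/nfsdata/share/test-hello-pvc-pvc-fb952566-4bed-11e9-9007-525400ad3b43`, the same directory name that appears in the listing later on.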

Configuring the NFS StorageClass step by step

- Prepare the NFS service

```shell
$ yum install nfs-utils -y
$ mkdir -p /nfsdata/share
$ chown nfsnobody:nfsnobody /nfsdata/share
$ chmod 700 /nfsdata/share

$ iptables -A INPUT -p tcp --dport 111 -j ACCEPT
$ iptables -A INPUT -p udp --dport 111 -j ACCEPT
$ iptables -A INPUT -p tcp --dport 2049 -j ACCEPT
$ iptables -A INPUT -p udp --dport 2049 -j ACCEPT

$ echo "/nfsdata/share *(rw,async,no_root_squash)" >> /etc/exports
$ systemctl restart nfs-server
$ exportfs -ra
$ showmount -e
```
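`echo ... >> /etc/exports` appends a new copy of the export line every time the setup is re-run. A minimal idempotent alternative is sketched below. `add_export` is a hypothetical helper, and the file path is passed in so it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# add_export EXPORTS_FILE LINE
# Appends LINE to EXPORTS_FILE unless an identical line already exists,
# so re-running the setup does not duplicate the export entry.
add_export() {
  local file=$1 line=$2
  touch -- "$file" || return 1
  # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `add_export /etc/exports "/nfsdata/share *(rw,async,no_root_squash)" && exportfs -ra`.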

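- Decide which project to install the provisioner in (`default` unless you choose otherwise)

If you use the `default` project, make it the current project (for example with `oc project default`), because the commands that follow read the namespace from the current project.

To deploy it in a custom project instead, create that project:

```shell
$ oc new-project nfs-provisioner
```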

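- If the project is not `default`, update the namespace in rbac.yaml to match it. Then create the RBAC objects and grant the service account the `hostmount-anyuid` SCC: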

```shell
$ cat deploy/rbac.yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

$ NAMESPACE=$(oc project -q)
$ sed -i'' "s/namespace:.*/namespace: $NAMESPACE/g" ./deploy/rbac.yaml
$ oc create -f deploy/rbac.yaml
$ oc adm policy add-scc-to-user hostmount-anyuid system:serviceaccount:$NAMESPACE:nfs-client-provisioner
```
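`sed -i` rewrites rbac.yaml in place, so a typo in the namespace is only noticed after `oc create` fails. A minimal dry-run variant is sketched below. `set_rbac_namespace` is a hypothetical helper using the same sed expression. This works because OpenShift project names are DNS labels and cannot contain the `/` that sed uses as a delimiter.

```shell
#!/usr/bin/env bash
# set_rbac_namespace FILE NAMESPACE
# Prints FILE with every "namespace: ..." value replaced by NAMESPACE.
# Unlike `sed -i`, the file itself is left unchanged, so the result can be
# reviewed first or piped straight into `oc create -f -`.
set_rbac_namespace() {
  local file=$1 ns=$2
  # Only the text from "namespace:" onward is replaced, so the YAML
  # indentation in front of it is preserved.
  sed "s/namespace:.*/namespace: ${ns}/" "$file"
}
```

Usage: `set_rbac_namespace deploy/rbac.yaml "$(oc project -q)" | oc create -f -`.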

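- Create the provisioner Deployment (the content of deploy/deployment.yaml) with your NFS server settings. Replace `<YOUR NFS SERVER HOSTNAME>` in both places: the `NFS_SERVER` environment variable and the `nfs.server` volume field.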

```shell
$ cat << EOF | oc create -f -
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  # apps/v1 requires an explicit selector matching the pod template labels
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: docker.io/xhuaustc/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: <YOUR NFS SERVER HOSTNAME>
            - name: NFS_PATH
              value: /nfsdata/share
      volumes:
        - name: nfs-client-root
          nfs:
            server: <YOUR NFS SERVER HOSTNAME>
            path: /nfsdata/share
EOF
```
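The server hostname and export path each appear twice in the Deployment. One way to keep them in sync is to set them once as shell variables and reference them inside the heredoc. Because the `EOF` delimiter is unquoted, the shell expands the variables before the text reaches `oc`. The sketch below shows only the `volumes` fragment. `nfs.example.com` and `render_nfs_volume` are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical values: replace with your NFS server and export path.
NFS_SERVER=nfs.example.com
NFS_PATH=/nfsdata/share

# render_nfs_volume
# Prints the Deployment's "volumes" fragment. The heredoc delimiter EOF is
# unquoted, so ${NFS_SERVER} and ${NFS_PATH} are expanded by the shell.
render_nfs_volume() {
  cat << EOF
volumes:
  - name: nfs-client-root
    nfs:
      server: ${NFS_SERVER}
      path: ${NFS_PATH}
EOF
}
```

The same `${NFS_SERVER}` and `${NFS_PATH}` references can replace the two `value:` lines under `env`. Quoting the delimiter (`<< 'EOF'`) would turn the expansion off.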

- Create the StorageClass

```shell
$ cat << EOF | oc create -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"  # make this the default StorageClass for new PVCs
provisioner: fuseim.pri/ifs  # must match the Deployment's 'PROVISIONER_NAME' environment variable
parameters:
  archiveOnDelete: "true"  # "false": PVC data is deleted with the PVC; "true": the data is kept (archived)
reclaimPolicy: Delete
EOF
```
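If the StorageClass's `provisioner` does not equal the Deployment's `PROVISIONER_NAME`, no provisioner picks up the claims, and new PVCs stay `Pending`. The sketch below is a pre-flight check for manifests kept as files. It assumes files such as `deploy/deployment.yaml` and a hypothetical `deploy/class.yaml`, and `provisioner_names_match` is a hypothetical helper that relies on the line layout used in this article rather than a real YAML parser.

```shell
#!/usr/bin/env bash
# provisioner_names_match DEPLOYMENT_YAML CLASS_YAML
# Succeeds if the PROVISIONER_NAME env value in the Deployment equals the
# StorageClass "provisioner" field; otherwise prints both and fails.
# Assumes the "value:" line directly follows "- name: PROVISIONER_NAME".
provisioner_names_match() {
  local dep=$1 cls=$2 want have
  want=$(awk '/name: PROVISIONER_NAME/ { getline; sub(/.*value:[ \t]*/, ""); print; exit }' "$dep")
  # Top-level "provisioner:" line, with any trailing "# comment" stripped.
  have=$(awk '/^provisioner:/ { sub(/^provisioner:[ \t]*/, ""); sub(/[ \t]*#.*/, ""); print; exit }' "$cls")
  if [ -n "$want" ] && [ "$want" = "$have" ]; then
    return 0
  fi
  echo "mismatch: deployment=${want:-<none>} storageclass=${have:-<none>}" >&2
  return 1
}
```

Usage: `provisioner_names_match deploy/deployment.yaml deploy/class.yaml && oc create -f deploy/class.yaml`.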

Using the NFS StorageClass

- Create a PVC

```shell
$ cat << EOF | oc create -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    volume.beta.kubernetes.io/storage-class: managed-nfs-storage
    volume.beta.kubernetes.io/storage-provisioner: fuseim.pri/ifs
  name: testpvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
```
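The PVC above selects its class through the `volume.beta.kubernetes.io/storage-class` annotation, which is the older beta mechanism. Kubernetes also supports the `spec.storageClassName` field for this, and the `storage-provisioner` annotation is typically filled in by the cluster itself. A minimal generator that uses the field instead is sketched below. `pvc_manifest` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# pvc_manifest NAME SIZE [STORAGE_CLASS]
# Prints a PVC manifest. If STORAGE_CLASS is given it is set through
# spec.storageClassName; if omitted, the field is left out so the
# cluster's default StorageClass (if one is marked) is used.
pvc_manifest() {
  local name=$1 size=$2 class=${3:-}
  echo "apiVersion: v1"
  echo "kind: PersistentVolumeClaim"
  echo "metadata:"
  echo "  name: ${name}"
  echo "spec:"
  if [ -n "$class" ]; then
    echo "  storageClassName: ${class}"
  fi
  echo "  accessModes:"
  echo "    - ReadWriteOnce"
  echo "  resources:"
  echo "    requests:"
  echo "      storage: ${size}"
}
```

Usage: `pvc_manifest testpvc 1Gi managed-nfs-storage | oc create -f -`.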

If the StorageClass sets `storageclass.kubernetes.io/is-default-class: "true"`, the PVC can be created more simply, without naming a StorageClass:

```shell
$ cat << EOF | oc create -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hello-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
```

- Check the PV and PVC

```shell
$ oc get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS          REASON   AGE
pvc-fb952566-4bed-11e9-9007-525400ad3b43   1Gi        RWO            Delete           Bound    test/hello-pvc   managed-nfs-storage            5m
$ oc get pvc
hello-pvc   Bound   pvc-fb952566-4bed-11e9-9007-525400ad3b43   1Gi   RWO   managed-nfs-storage   4m
```
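A new PVC may take a moment to become `Bound`. `oc get pvc NAME -o jsonpath='{.status.phase}'` prints just the phase, so a script can poll it. The minimal sketch below takes the command as arguments so it can be tried without a cluster. `wait_bound` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# wait_bound TRIES CMD [ARGS...]
# Runs CMD (which should print a PVC phase) up to TRIES times, one second
# apart, and returns 0 as soon as it prints "Bound", or 1 if it never does.
wait_bound() {
  local tries=$1 i=0 phase
  shift
  while [ "$i" -lt "$tries" ]; do
    phase=$("$@" 2>/dev/null)
    if [ "$phase" = "Bound" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

Usage: `wait_bound 30 oc get pvc hello-pvc -o jsonpath='{.status.phase}' && echo "hello-pvc is bound"`.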

- If the StorageClass sets `archiveOnDelete: "true"`, the data directory is archived (renamed with an `archived-` prefix) when the PVC is deleted:

```shell
$ ls /nfsdata/share
test-hello-pvc-pvc-fb952566-4bed-11e9-9007-525400ad3b43
$ oc delete pvc hello-pvc
$ ls /nfsdata/share
archived-test-hello-pvc-pvc-fb952566-4bed-11e9-9007-525400ad3b43
```
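The delete behaviour in the listing above can be mimicked with a small local simulation. It is not the provisioner's code. As a simplification, only the literal value `"true"` archives, and any other value removes the directory. `release_dir` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical simulation of what happens to a claim's directory on delete.
# release_dir ROOT DIR_NAME ARCHIVE_ON_DELETE
#   "true": rename DIR_NAME to archived-DIR_NAME (data is kept)
#   other : remove DIR_NAME and its data
release_dir() {
  local root=$1 name=$2 archive=$3
  local src="${root}/${name}"
  # Nothing to do if the directory is already gone.
  [ -d "$src" ] || return 0
  if [ "$archive" = "true" ]; then
    mv -- "$src" "${root}/archived-${name}"
  else
    rm -rf -- "$src"
  fi
}
```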

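Summary

With an NFS StorageClass in place, creating storage becomes simple and convenient.

Source code for the OpenShift NFS dynamic provisioner: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client

Originally published at: https://mp.weixin.qq.com/s/HgDCDgYjkX5en7ORNeG0yA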